Lessons from building on Genesys Cloud's live voice transcription

Genesys Cloud's Notifications API makes streaming a live transcript into an agent's UI very easy indeed. But the implementation you choose can vary significantly depending on your use case. In this article, I thought it might be interesting to share one such implementation, and the decisions I made along the way…

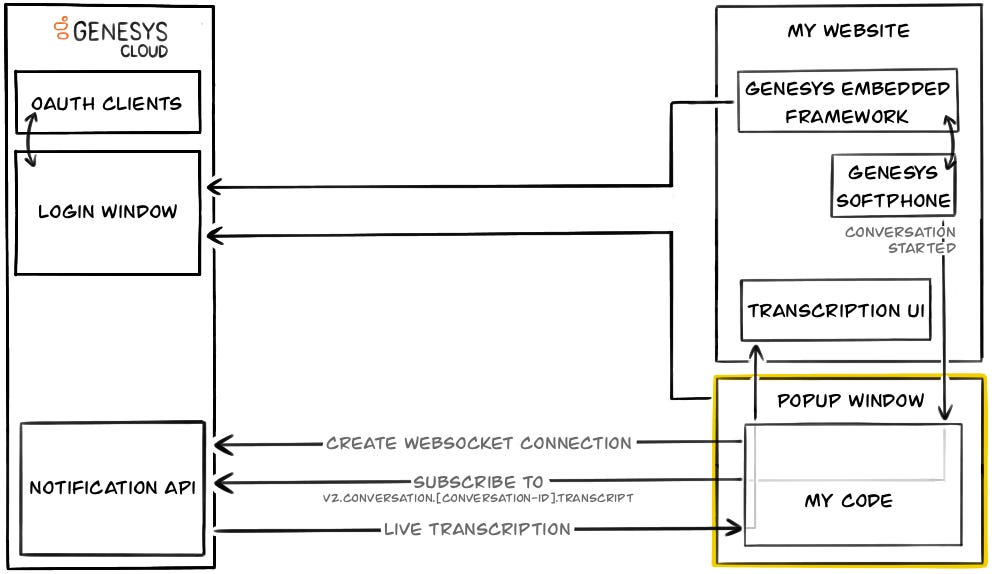

I set out to add a real-time transcript (enriched in real time too) to a UI that already had a Genesys Cloud softphone, backed by the Genesys Cloud Embeddable Framework.

It's worth mentioning that although Agent Assist displays a live transcript, you cannot enrich it within the UI, for example with real-time sentiment tracking or personalised entity extraction. This was one of the reasons I built a custom implementation.

So, the first question was how I was going to access the transcript...

Approaches to accessing a real-time transcript

Genesys Cloud has two methods for accessing a real-time transcript, both easy to integrate with, but each designed for different use-cases. In my case the Notifications API was an obvious fit, as I will explain below.

AWS EventBridge Integration

Genesys Cloud’s Amazon EventBridge integration can deliver real-time data, from an ever-growing list of topics, to EventBridge in your AWS account. From there you can filter and route data wherever you like.

This approach is great if you want to process utterances in near real-time for analytical tasks (and other fun use-cases) but wasn't the right fit for what I was doing.

Notifications API

The Notifications API, with its support for delivering real-time events via WebSockets, was perfect for my use-case, as it offers:

Events scoped to the authenticated agent

Low latency. In my experience, latency improved to around 2 to 4 seconds at about the time Agent Assist was introduced, though this may be coincidental.
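Events on the channel arrive as JSON text frames. Below is a minimal sketch of turning one frame into utterances, under some stated assumptions. It assumes the usual notification envelope of `topicName` plus `eventBody`, and heartbeats arriving on the `channel.metadata` topic. The transcript fields (`utteranceId`, `isFinal`, `channel`, `alternatives`) and the INTERNAL/EXTERNAL speaker mapping are assumptions worth verifying against a real payload. `Utterance` and `parseTranscriptFrame` are illustrative names, not part of any Genesys SDK.

```typescript
// Illustrative shapes only: verify the field names against a real payload.
interface TranscriptAlternative {
  transcript: string;
}

interface TranscriptEntry {
  utteranceId: string;
  isFinal: boolean;
  channel: string; // assumed: "INTERNAL" = agent, "EXTERNAL" = customer
  alternatives: TranscriptAlternative[];
}

interface Utterance {
  id: string;
  speaker: "agent" | "customer";
  text: string;
}

// Parse one WebSocket text frame and return the final utterances it carries.
// Heartbeats and malformed frames yield an empty array.
function parseTranscriptFrame(raw: string): Utterance[] {
  let frame: unknown;
  try {
    frame = JSON.parse(raw);
  } catch (_err) {
    return [];
  }
  if (typeof frame !== "object" || frame === null) return [];

  const envelope = frame as { topicName?: unknown; eventBody?: unknown };
  // Heartbeats arrive on the channel.metadata topic and carry no transcript.
  if (typeof envelope.topicName !== "string") return [];
  if (envelope.topicName === "channel.metadata") return [];
  const body = envelope.eventBody;
  if (typeof body !== "object" || body === null) return [];

  const transcripts = (body as { transcripts?: unknown }).transcripts;
  if (!Array.isArray(transcripts)) return [];

  const utterances: Utterance[] = [];
  for (const entry of transcripts as unknown[]) {
    if (typeof entry !== "object" || entry === null) continue;
    const t = entry as Partial<TranscriptEntry>;
    // Keep only final results; interim results are skipped in this sketch.
    if (t.isFinal !== true || typeof t.utteranceId !== "string") continue;
    const best = Array.isArray(t.alternatives) ? t.alternatives[0] : undefined;
    if (!best || typeof best.transcript !== "string") continue;
    utterances.push({
      id: t.utteranceId,
      speaker: t.channel === "INTERNAL" ? "agent" : "customer",
      text: best.transcript,
    });
  }
  return utterances;
}
```

Dropping interim results keeps the example short; a UI that wants words to appear as they are spoken would render them too and replace them once the final version of the utterance arrives.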

Iteration 1. Utilising the Embeddable Framework

Having decided to use the Notifications API to stream the real-time transcript of the agent/customer call, I prototyped a solution based on the existing setup.

The simplest prototype I could come up with was:

Agent navigates to the UI

The Genesys Cloud Embeddable Framework authenticates the agent against an OAuth Client

The auth token from the Embeddable Framework is shared with my UI

My UI uses this token to talk to Genesys Cloud's Notifications API and establish a WebSocket connection

It then listens for Conversation Started events from the Embeddable Framework to determine when an agent starts a call that needs to be transcribed

When a call starts, my code subscribes the channel to the call's live transcript
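The last three steps can be sketched against the REST endpoints directly. This is a hedged outline rather than the prototype's actual code. `HttpPost` is a cut-down stand-in for `fetch` so the logic can be exercised without a network, and `apiBase` is the region-specific API host, which you must supply. The topic string in `transcriptTopic` is the format I believe the API uses, so check it against the available-topics list for your org.

```typescript
// Minimal slice of fetch that this sketch needs, so it can be faked in tests.
type HttpPost = (
  url: string,
  init: { method: "POST"; headers: Record<string, string>; body?: string },
) => Promise<{ ok: boolean; status: number; json(): Promise<unknown> }>;

interface NotificationChannel {
  id: string;
  connectUri: string; // WebSocket URL to open once the channel exists
}

// Assumption: verify this topic format against the available-topics list.
function transcriptTopic(conversationId: string): string {
  return `v2.conversations.${conversationId}.transcription`;
}

function authHeaders(token: string): Record<string, string> {
  return { Authorization: `Bearer ${token}`, "Content-Type": "application/json" };
}

// Create a notification channel for the authenticated agent.
async function createChannel(
  post: HttpPost,
  apiBase: string,
  token: string,
): Promise<NotificationChannel> {
  const res = await post(`${apiBase}/api/v2/notifications/channels`, {
    method: "POST",
    headers: authHeaders(token),
  });
  if (!res.ok) throw new Error(`Channel creation failed with status ${res.status}`);
  const body = await res.json();
  if (typeof body !== "object" || body === null) throw new Error("Unexpected channel response");
  const { id, connectUri } = body as Partial<NotificationChannel>;
  if (typeof id !== "string" || typeof connectUri !== "string") {
    throw new Error("Unexpected channel response");
  }
  return { id, connectUri };
}

// Add the call's transcript topic to the channel's existing subscriptions.
async function subscribeToTranscript(
  post: HttpPost,
  apiBase: string,
  token: string,
  channelId: string,
  conversationId: string,
): Promise<void> {
  const url = `${apiBase}/api/v2/notifications/channels/${encodeURIComponent(channelId)}/subscriptions`;
  const res = await post(url, {
    method: "POST",
    headers: authHeaders(token),
    body: JSON.stringify([{ id: transcriptTopic(conversationId) }]),
  });
  if (!res.ok) throw new Error(`Subscription failed with status ${res.status}`);
}
```

In a browser, the global `fetch` can be passed as `post`, and `new WebSocket(channel.connectUri)` opens the connection once the channel exists. `subscribeToTranscript` would then be called from the Conversation Started handler with the new conversation's ID.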

Iteration 2. Decoupling authentication

In the first iteration I chose to share the Embeddable Framework's token with my UI's code. But this is problematic...

The problem

In this initial prototype there were two problems, which had to be fixed before moving forward:

I'd coupled my solution's authentication flow to the Genesys Cloud Embeddable Framework

I had to share the token from the Embeddable Framework with my code (cross-origin), which increased the risk of it being exposed

At the code level this involved acquiring the token via getAuthToken inside framework.js, then posting it to the parent window, where my UI could receive it:

    window.PureCloud.subscribe([{
      type: "UserAction",
      callback: function (category) {
        if (category === 'login') {
          window.PureCloud.User.getAuthToken(function (accessToken) {
            // Security risk: the token is handed to the parent window
            window.parent.postMessage({ accessToken }, targetOrigin);
          });
        }
      }
    }]);

Shipping this beyond the prototype could have exposed the access token to any JavaScript running in the parent window, among other security concerns.

The solution

Both problems, the coupled auth flow and the shared token, were solved by having my solution manage its own authentication.

Implementing your own login flow for Genesys Cloud is as easy as creating an OAuth Client and calling the Platform API Client SDK:

    const apiClient = platformClient.ApiClient.instance;

    apiClient
      .loginPKCEGrant(clientId, redirectUri)
      .then((authData) => {
        // ...
      })
      .catch((error) => {
        // ...
      });

Iteration 3. Preventing refreshes from losing utterances

One interesting problem I encountered was that transcription events were missed when agents refreshed their browser.

The reason was simple: during the page refresh the WebSocket connection to the Notifications API was closed and, even though I promptly re-established it once the page had loaded, there was a brief window in which I was not subscribed to the topic. Any utterance sent during this window was lost, with no way of replaying it.

The solution happened to be a popup window…

Having wondered how the Genesys softphone maintained its audio connection, I realised the obvious: it has a popup window, which survives any refresh of the page containing the softphone.

The refactor for this change was big, and involved shifting all the logic for authentication and Notifications API subscription into the popup window.

The UI was now very thin, and just received utterances emitted from the popup window via a Broadcast Channel, which is possible because both are on the same origin:

    /* Within popup */

    // Create channel
    const channel = new BroadcastChannel("transcription-events");

    // ...

    // Post events from Notifications API to channel
    this.ws.onmessage = (event: any) => {
      // ... validate and transform payload ...
      channel.postMessage({
        type: "transcription-updated",
        data: transcriptionUpdate
      });
    };

I also wrote logic to check whether a popup window was already open, so that I never opened more than one.
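One way such a check could work is a ping/pong exchange over a channel of the same kind: the UI posts a ping, and a live popup answers with a pong before a short timeout expires. The sketch below illustrates that idea and is not necessarily how the implementation described here does it. The `popup-ping` and `popup-pong` message types, the `PresenceChannel` interface, and the 300 ms default are all assumptions made for the example.

```typescript
// The subset of BroadcastChannel this sketch relies on, so it can be faked in tests.
interface PresenceChannel {
  postMessage(message: unknown): void;
  addEventListener(type: "message", listener: (event: { data: unknown }) => void): void;
  removeEventListener(type: "message", listener: (event: { data: unknown }) => void): void;
}

// Hypothetical message types for the presence handshake.
const PING = "popup-ping";
const PONG = "popup-pong";

function isPresenceMessage(data: unknown, type: string): boolean {
  return typeof data === "object" && data !== null && (data as { type?: unknown }).type === type;
}

// Popup side: answer every ping so other pages can tell that a popup is alive.
function answerPings(channel: PresenceChannel): void {
  channel.addEventListener("message", (event) => {
    if (isPresenceMessage(event.data, PING)) channel.postMessage({ type: PONG });
  });
}

// UI side: resolves true if a popup answers within timeoutMs, otherwise false.
function isPopupOpen(channel: PresenceChannel, timeoutMs = 300): Promise<boolean> {
  return new Promise<boolean>((resolve) => {
    const finish = (open: boolean): void => {
      clearTimeout(timer);
      channel.removeEventListener("message", onMessage);
      resolve(open);
    };
    const onMessage = (event: { data: unknown }): void => {
      if (isPresenceMessage(event.data, PONG)) finish(true);
    };
    const timer = setTimeout(() => finish(false), timeoutMs);
    channel.addEventListener("message", onMessage);
    channel.postMessage({ type: PING });
  });
}
```

In the browser, a `BroadcastChannel` should fit the `PresenceChannel` shape, with the UI and the popup each creating their own instance under the same channel name. The UI would call `window.open` only when `isPopupOpen` resolves to `false`.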

What if my UI and Popup were on different domains?

The Broadcast Channel above only works because the UI and popup share the same origin. If they didn't, they would be considered 'cross-origin', and the browser would place far more restrictions on how they can communicate. In that case I would have relied on the much inferior postMessage call.
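Because any window can post a message to another, the receiving side of that fallback has to check who sent each message, and what it contains, before acting on it. A minimal sketch of such a check follows. `acceptPopupMessage` and `trustedOrigin` are illustrative names, and the `transcription-updated` type mirrors the Broadcast Channel message shown earlier.

```typescript
// The two MessageEvent fields the check needs; a real MessageEvent fits this shape.
interface IncomingMessage {
  origin: string;
  data: unknown;
}

interface TranscriptionUpdated {
  type: "transcription-updated";
  data: unknown;
}

// Returns the message if it came from the trusted origin and has the expected
// shape, otherwise null so the caller can drop it.
function acceptPopupMessage(
  event: IncomingMessage,
  trustedOrigin: string,
): TranscriptionUpdated | null {
  // Reject anything not sent by the popup's origin.
  if (event.origin !== trustedOrigin) return null;

  const payload = event.data;
  if (typeof payload !== "object" || payload === null) return null;

  const candidate = payload as { type?: unknown; data?: unknown };
  if (candidate.type !== "transcription-updated") return null;
  if (!("data" in payload)) return null;

  return { type: "transcription-updated", data: candidate.data };
}
```

Inside a `message` listener this would be called with the event and the popup's known origin, for example a hypothetical `https://popup.example.com`, and anything that comes back as `null` is simply dropped.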

    /* Within UI */

    // Open popup
    const popupRef = window.open(
      popupUrl,
      popupName,
      'width=450px,height=450px,status,opener',
    );

    // Send message to popup (popupRef is null if the browser blocked the popup)
    popupRef?.postMessage(event, origin);

    // Receive messages from popup
    window.addEventListener('message', (event: MessageEvent) => {
      // ... Validate event.origin ...
      // ... Validate payload ...
      processMessageFromPopup(event);
    });

Conclusion

This was a fairly brief look into the iterations that went into building a resilient and secure real-time transcript feature based on Genesys Cloud's Notifications API.

I hope that in spite of its brevity, it was of some interest. It was certainly cathartic to write!

If anything here sparked an idea or improvement then I'd love to know! I’m also very happy to answer any questions you may have; just ping me on LinkedIn.

![The diagram shows the interaction between Genesys Cloud and a website. It begins with a login window and OAuth client in Genesys Cloud authenticating with the Genesys Embedded Framework on the website. Once authenticated, a Genesys softphone starts a conversation. A WebSocket connection is created from the website to the Genesys Notification API, and the website subscribes to a transcription topic (v2.conversation.[conversation-id].transcript). Live transcription data is sent to the website’s code and displayed in a transcription UI.](https://substackcdn.com/image/fetch/$s_!bQZR!,w_1456,c_limit,f_auto,q_auto:good,fl_progressive:steep/https%3A%2F%2Fsubstack-post-media.s3.amazonaws.com%2Fpublic%2Fimages%2Fc2be3d90-cd14-43e1-9cf8-189da55b9f2e_987x506.png)
